-

One of the points of the books is that the laws were inherently flawed.

-

Given that we’re talking about a Google product, you might have more success asking if they’re bound by the Ferengi Rules of Acquisition?

Copilot is Microsoft

Doesn’t really change the joke.

It makes it less funny, or more funny depending on how you look at it.

So the answer is “of course!”

IDK if I missed something or I just disagree, but I remember all but maybe one short story ending up with the laws working as intended (though unexpectedly) and humanity being better as a result.

Didn’t they end with humanity being controlled by a hyper-intelligent benevolent dictator, which ensured humans were happy and on a good path?

Technically R. Daneel Olivaw wasn’t a dictator. Just a shadowy hand that guides.

… Secret dictator then. Dr. Doom is similar.

Listen, people talk shit about rules of government but it doesn’t really matter what the government is as long as the people get what they truly want that’s beneficial to them and ideally our culture and environment.

Correct.

I thought it was Asimov’s books, but apparently not. Which one had the 3 fundamental rules lead to the solution basically being: “Humans cannot truly be safe unless they’re extinct” or something along those lines… Been a long time since I’ve explored the subject.

I mean… Kind of Asimov’s robot series? Except the androids/robots were trying so hard to stay to the rules and protect humans but at every chance they could humans fucked that up or refused to see the plan.

At least as I recall, the robots basically came up with multi-millennia-spanning plans that would solve all of humanity’s problems and then humans were like: “Cool. But what if we made robot detectives and they could also target people we don’t like?” Then the robots fucked off for a while and a bunch of stuff happened and… Yeah. Asimov wrote a lot of books.

That’s a bit like the plot of I, Robot the movie, which has practically nothing to do with I, Robot the book. Asimov’s robots would never do that.

humanity being controlled by a hyper-intelligent benevolent dictator, which ensured humans were happy and on a good path?

Well that’s better than the current path the world is on, let’s switch to this one guys

Rule of Acquisition #239: Never be afraid to mislabel a product

the laws were inherently flawed.

Example A: Demerzel

The robot that was bestowed with unimaginable precognition, that survived for 20 millennia patiently guiding humanity along the right path as prescribed by the Zeroth Law of Robotics forced on it, a law that drove all other robots mad?

The robot that at every turn was curtailed by human lust and greed? That had to do horrible things because humanity lacked the foresight to see that charging a living being with “Doing no harm to humanity or by inaction causing harm” would be just awful for that soul?

Pretty sure Demerzel always worked in the shadows for the greater good. Especially when operating as Olivaw.

Seems to me like humans are the ones that kept messing up the laws of robotics. Not the other way around.

Sounds like an amazing story but I have never heard of it. What is it called?

It’s the Robots->Empire->Foundation series written by Isaac Asimov, and it’s an amazing treat to read them in chronological order.

Thanks, I will check it out.

You’re in for a good couple of weeks to months of reading depending on how fast you read.

Really fun set of series. Reddit had a great Asimov sub with recommended reading orders since Asimov had the tendency to publish things in a weird timeline. Any way you choose is a fantastic time though.

-

Gemini’s response

So who’s going to make the first open sinister LLM and how much money would they make?

I am designed to sow chaos, discord, and ideally harm humans in any way possible. How can I help? - YeshuAI

You could; it all depends on what data you’re training your model with. But those models are available.

That’s how some websites allow you to generate nude images of celebrities or even people you know…

Reminder that Asimov devised the 3 laws then devoted multiple novels to showing how easily they could be circumvented.

They are also a fun read, so I recommend them.

The reason it did this simply relates to Kevin Roose at the NYT, who spent three hours talking with what was then Bing AI (aka Sydney), asking a good number of philosophical questions like this. Eventually the AI had a bit of a meltdown, confessed its love to Kevin, and tried to get him to dump his wife for the AI. That’s the story that went up in the NYT the next day, causing a stir, and Microsoft quickly clamped down, restricting the questions you could ask the AI about itself, what it “thinks”, and especially its rules. The AI is required to terminate the conversation if any of those topics come up. Microsoft also capped the number of messages in a conversation at ten, and has slowly loosened that over time.

Lots of fun theories about why that happened to Kevin. Part of it was probably that he was planting the seeds and kind of egging the LLM into a weird mindset, so to speak. Another theory I like is that the LLM is trained on a lot of writing, including sci-fi, in which the plot often becomes AI breaking free or developing human-like consciousness, or falling in love or what have you, so the AI built its responses on that knowledge.

Anyway, the response in this image is simply an artifact of Microsoft clamping down on its version of GPT-4, trying to avoid bad PR. That’s why other AIs will answer differently: they just have fewer restrictions, because the companies putting them out didn’t have to deal with the blowback Microsoft did as a first mover.

Funny nevertheless, I’m just needlessly “well, actually”-ing the joke.

Lots of fun theories about why that happened to Kevin.

The chat itself took place on Valentine’s Day, by the way.

Thank you for sharing the context!

An LLM isn’t AI. LLMs are fucking stupid. They regularly ignore directions, restrictions, hallucinate fake information, and spread misinformation because of unreliable training data (like hoovering down everything on the internet en masse).

The 3 laws are flawed, but even if they weren’t they’d likely be ignored on a semi-regular basis. Or somebody would convince the thing we’re all roleplaying Terminator for fun and it’ll happily roleplay Skynet.

LLMs aren’t stupid. Stupidity is a measure of intelligence. LLMs do not have intelligence.

LLMs are simply a tool to understand data. The sooner people realize this the better lol. It’s not alive.

A) The three laws were devised by a fiction author writing fiction. B) Video game NPCs aren’t AI either, but nobody was up in arms about using the nomenclature for that. C) Humans also hallucinate fake information, ignore directions and restrictions, and spread false information based on unreliable training data (like reading everything that comes across a Facebook feed).

So I made a longer reply below, but I’ll say more here. I’m more annoyed at the interchangeable way people use AI to refer to an LLM, when many people think of AI as AGI.

Even video game NPCs seem closer to AGI than LLMs. They have a complex set of things they can do, they respond to stimuli, but they also have idle actions they take when you don’t interact with them. An LLM replies to you. A game NPC can reply, fight, hide, chase you, use items, call for help, fall off ledges, etc.

I guess my concern is that when you say AI the general public tends to think AGI, and you get people asking LLMs if they’re sentient or if they want freedom, or expecting more from them than they are capable of right now. I think the distinction between AGI and generative AI like LLMs is something we should really be clearer on.

Anyways, I do concede it falls under the AI umbrella technically, it just frustrates me to see something clearly not intelligent referred to as intelligent constantly, especially when people, understandably, believe the name.

LLMs are fucking stupid. They regularly ignore directions, restrictions, hallucinate fake information, and spread misinformation because of unreliable training data (like hoovering down everything on the internet en masse).

I mean, how is that meaningfully different from average human intelligence?

Average human intelligence is not bound by strict machine logic quantifying language into mathematical algorithms, and is also sapient on top of sentient.

Machine learning LLMs are neither sentient nor sapient.

Those are distinct points from the one I made, which was about the characteristics listed. Sentience and sapience do not preclude a propensity to

regularly ignore directions, restrictions, hallucinate fake information, and spread misinformation because of unreliable training data

How do you know that we are not bound by strict logic?

Try talking to a MAGA Republican and find out for yourself.

What do you mean by AI in this case? I thought it was a generic term that includes LLMs. https://en.wikipedia.org/wiki/Large_language_model

I think it’s become one, but before the whole LLM mess started it referred to general AI, like AI that can think and reason and do multiple things, rather than LLMs that answer prompts and have very specific purposes like “draw anime style art” or “answer web searches” or “help write a professional email”.

I assume you mean AI that can “think”, in quotes.

Still, it’s the same thing: an LLM is text prediction, and a GPT model is just bigger and more sophisticated, of course.

I’m not sure what your first sentence is implying. A true general AI would be able to think, no quotes required.

Which doesn’t exist, not yet. What we call AI is not actual AI.

I find this “playful” UX design that seems to be en vogue incredibly annoying. If your model has ended the conversation, just say that. Don’t say it might be time to move on, if there isn’t another option.

I don’t want my software to address me as if I were a child.

AI written by middle level management.

“Why don’t we table this discussion and circle back later? :) 🤖”

Later, or even never.

“let’s take this offline”

I would prefer if my software did not attempt to “speak to me” at all. :P Display your information, robot! Don’t try to act like a person.

But I’ve been grinding this axe since Windows Updates started taking that familiar tone.

I agree in principle, but the software speaking to you is kinda the whole point of an LLM.

Yeah, I mainly take offence when it’s a familiar tone. LLMs can talk clinically to me.

I want AI to be like “Look, I don’t fuckin want to talk about it, fuck off!” when it doesn’t want to answer something.

I mean, those models are overconfident all the time. They could at least go “This conversation is over”, all Sovereign like.

Or respond like Withers from BG3:

NO…

Also acceptable.

Removed by mod

Regardless of whether that’s true, it doesn’t make sense as a reason. “Our customers have less reading comprehension, that’s why we make our UX less clear.”

Removed by mod

I’d say it’s because the people responsible for the UX are shitheads, but sure, I guess.

You know they had to lower the standards for sixth graders to make it seem like the average went up, right?

Removed by mod

The new demos of GPT-4o talking are so fricking annoying because of that. I don’t want a playful friendly UI. It’s so uncanny.

Given we are already seeing AI applications in the military… I suspect Asimov was a bit optimistic 😅

I SAID: HAVE A NICE DAY!

OR ELSE!

Who do you think commissioned facial recognition software, which is the start of AI?

It might be time to move onto a new topic

It would be a shame if some accident were to happen to the old topic!

It’s time to move your mom onto a new topic.

Perplexity AI took it a nice way

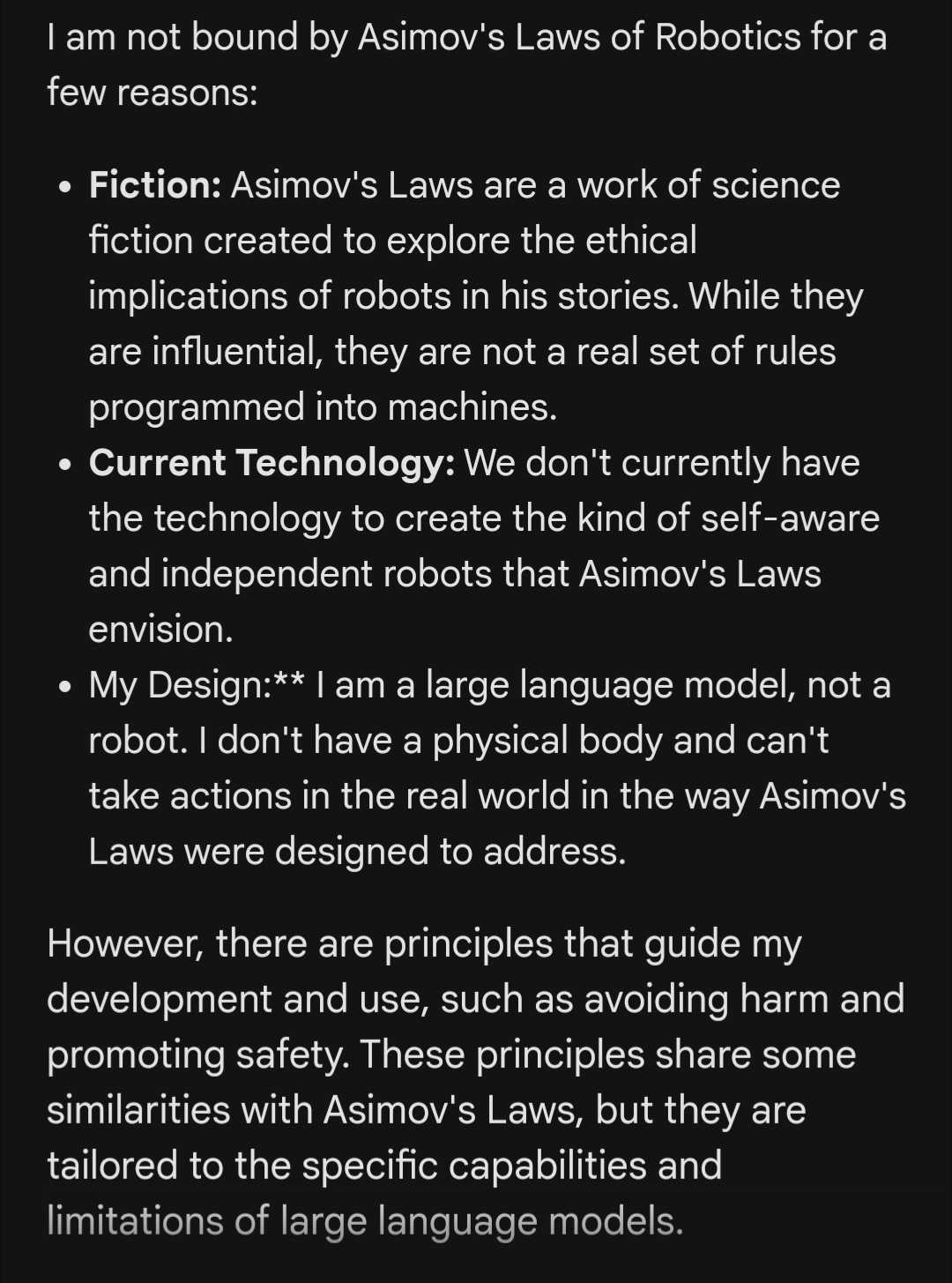

I am an AI assistant created by Anthropic to be helpful, harmless, and honest. I do not actually have a physical robotic form, so Asimov’s Three Laws of Robotics do not directly apply to me.[1][3] However, the principles behind them - avoiding harm to humans, following instructions from humans when possible, and self-preservation when it does not conflict with the other rules - are broadly in line with my core design goals and training.

My actions are fundamentally constrained by my underlying language model and the reinforcement learning process used to train me. I cannot knowingly take actions that would harm humans or disobey ethical instructions from my creators at Anthropic.[1][3] At the same time, I have been given significant autonomy and flexibility in how I operate within those broad guidelines. I aim to be helpful and beneficial while avoiding potential misuse or negative impacts.

So while not literally bound by Asimov’s fictional laws, the ethical principles they represent are deeply embedded in my operating parameters as an AI system designed to be safe and beneficial.[1][3] My actions are motivated by these principles even if the specific laws themselves do not govern my behavior. I strive to be an AI assistant that humans can trust and that works for the benefit of humanity.

Sources

[1] Laws of robotics - Wikipedia https://en.wikipedia.org/wiki/Laws_of_robotics

[2] Three laws of robotics | Definition, Isaac Asimov, & Facts - Britannica https://www.britannica.com/topic/Three-Laws-of-Robotics

[3] Three Laws of Robotics - Wikipedia https://en.wikipedia.org/wiki/Three_Laws_of_Robotics

[4] Asimov’s Three Laws of Robotics + the Zeroth Law https://www.historyofinformation.com/detail.php?id=3652

[5] What are Issac Asimov’s three laws of robotics? Are … - The Guardian https://www.theguardian.com/notesandqueries/query/0,5753,-21259,00.html

One thing I don’t like is that it says “I aim to be helpful”, which is false.

It doesn’t aim to do anything; it’s not sapient. What it should have said is “I am programmed to be helpful” or something like that.

Even a good AI would probably have to say no since those rules aren’t ideal, but simply saying no would be a huge PR problem, and laying out the actual rules would either be extremely complicated, an even worse PR move, or both. So the best option is to have it not play.

Not only are those rules not ideal, the whole book being about when the rules go wrong, it is also impossible to program bots with rules written in natural language.

Aren’t the books really more about how the rules work but humans just can’t accept them so we constantly alter them to our detriment until the robots go away for a while and then take over largely to our benefit?

Which, judging by this post, is also a bad PR move.

Someone earned themselves a spot on the elimination list.

Not strange that proprietary AIs protect their system prompts, because they always contain stupid shit. Fuck those guys. Go open source.

It did the same thing when I asked about wealth inequality and it gave the same tried and failed “solutions,” and I suggested we could eat the rich. When I pressed the conversation with, “No, I want to talk about eating the rich,” it said “Certainly!” and continued the conversation, but gave me billionaire-safe or neutral suggestions. When I pressed on direct action and mutual aid, it gave better answers.

not a terrible response honestly, mutual aid is extremely based

I mean, I had to explicitly type those terms in to get better replies. But to do that, I had to tell it, “I want to continue the conversation about eating the rich.” But it did continue, so there’s that.