there is no way in hell a 2014 computer is able to run modern games on medium settings at all, let alone run well. my four-year-old computer (Ryzen 5 4000, GTX 1650, 16 GB RAM) can barely get 30-40 fps on most modern games at 1080p even on the absolute lowest settings. don’t get me wrong, it should still work fine. however, almost no modern games are optimized at all and the “low” settings are all super fucking high now, so anon is lying out of his ass.

It says the story took place in 2020, and that it played “most games” on medium settings. 30-40 fps is playable to a lot of people. I’m inclined to believe them.

If you don’t upgrade to Windows 11, you can’t use Recall, which is a great reason not to upgrade to Windows 11.

I upgraded to Linux. It worked out well for me since I mostly play retro games and games from yesteryear.

I upgraded a Chromebook to Linux recently. That was a huge bump in performance that I wasn’t expecting, and not just for gaming.

What OS was it running before? ChromeOS is Linux, right?

Technically yes, and so is Android. But neither works the way you’d expect a typical Linux distro to work.

Yeah, it was ChromeOS. It is sort of Linux, but Google is an advertising company. You can’t ask them not to collect your data, and recently they gave up pretending like they cared about user privacy. Linux is none of that. Complete opposite.

If you compare it to Linux side by side, ChromeOS is basically the alternate-reality evil twin with the goatee.

What distro? Did you follow a guide?

I installed Lubuntu 24 on it using a guide that loosely applied to the low-end Chromebook I have. Link here

Using the Chrome browser on ChromeOS was snappy, but any other browser I used with add-ons was an awful, laggy experience. The difference in performance was an unexpected win, but I primarily did it to ditch SpywareOS.

Going forward I’m probably going to just look for Chromebooks to convert to Linux as a daily-driver laptop, because you don’t have to pay a premium for the spyware like you do with a Windows laptop.

I upgraded to Linux and can still play every game I’ve tried to play

If you want to stay with Windows for whatever reason, even 11, I can recommend Revision Playbook. It locks down your installation and strips out the crap: unwanted updates and features like AI bullshit, Edge, telemetry and whatnot. You can even manually install apps from the Store without the Store itself if you like. In my case, security patches and selective updates come only via manual download from the MS catalogue, but you can automate this too with some tools.

People want shiny new things. I’ve had relatives say stuff like “I bought this computer 2 years ago and it’s getting slower, it’s awful how you have to buy a new one so quickly.” I suggest things to improve it, most of which are free or very cheap and which I’d happily do for them. But they just go out and buy a brand new one, because that’s secretly what they wanted to do in the first place; they just don’t want to admit they’re that materialistic.

Maybe your relatives don’t like you. It’s a petty but valid reason to ignore perfectly good advice.

People are living through a historic standstill. Society barely develops in any meaningful or hopeful way. Social relationships stagnate or decline. So they look for a feeling of progress and agency through participating in the market and consuming.

They don’t realize this because they aren’t materialistic enough, in the sense that they don’t analyse their condition as the result of the political and cultural configuration of their lives, which is what makes real agency seem unavailable.

They’re invested in PC gaming as social capital where the performance of your rig contributes to your social value. They’re mad because you’re not invested in the same way. People often get defensive when others don’t care about the hobbies they care about because there’s a false perception that the not caring implies what they care about is somehow less than, which feels insulting.

Don’t yuck others’ yum, but also don’t expect everyone to yum the same thing.

Very well put! I’d also add that most people aren’t even really conscious that that’s the reason they’re mad. There are ways to express your negative opinion without stating it as a fact or downplaying the other person’s taste.

I’m very certain Anon isn’t just saying “nah, my rig works” to them when asked.

Maybe something closer to “LMAO normies wasting money. fuckin coomsumers, upgrading for AAAA slop! LMFAO” dropped into conversations they weren’t invited to.

I use a gaming laptop from 2018, a ROG Zephyrus.

The fan started making a grating noise even after a thorough cleaning, so I found a replacement on eBay and boom, back in business playing Hitman and Stardew.

Will I get 120 fps or dominate multiplayer? Nah. But yeah, it works fine. It might even be a hand-me-down later on.

Absolutely, it totally depends on what you got originally. If you only got an okay-ish PC in 2018, then it definitely won’t still be fit for purpose in 2025, but if you got a good gaming PC in 2018, it will probably still work in another 5 years, although at that point you’ll probably be on minimum settings for most new releases.

I would say 5 to 10 years is probably the lifespan of a gaming PC without an upgrade.

However my crappy work laptop needs replacing after just 3 years because it was rubbish to start with.

It depends on what gaming you do. My 10-year-old PC with a 6-year-old GPU plays Minecraft fine.

My other “new PC” is a mini PC with Nvidia GTX 1080-level graphics, and it plays Half-Life: Alyx fine.

We replaced my mom’s warcraft machine 3 years ago. It replaced an Athlon II from 2k7, at 14 years old. Your tank may be a 74-year-old grandmother, so be nice.

I want to talk about writing 2k7 instead of 2007. It does save a character, but I also had to read it 3 times to understand it lol, though that might be a me problem.

Also: do you only do that for 2000-2009, or do you write 2k25?

Also, also: hope this doesn’t come across as rude. I’ve never seen it written that way & find it interesting and a little funny.

Also, also, also: I think it’s sweet you helped your mom upgrade her computer so she could play WoW more effectively.

Oh yeah that’s what it means, I thought 2k7 was a company I’d never heard of.

my mom’s warcraft machine

We truly are living in the future

And even then, a few strategic upgrades of key components could boost things again. New gfx card, a better SSD, more/faster RAM, any of those will do a lot.

High end gaming laptops are about a 5 year cycle, presuming you want everything ultra or high settings.

If you don’t care, my old laptop with a 7700K and a 1070 still runs almost anything, just not as well as a brand-new top-end machine.

I built an overkill PC in February 2016. It was rocking a GTX 980 Ti a little before the 1080 came out, and it was probably the best GPU out there, factory overclocked and water cooled by EVGA. My CPU was an i5-4690K, which was solidly mid range then, but I overclocked it myself from 3.5GHz to 5.3GHz with no issue, and only stopped there because I was so suspicious of how well it was handling that massive increase. I had 2TB of SSD space, like 8TB of regular hard drives and 16GB of RAM.

Because I have never needed to think about space, and so many of my parts were really overpowered for their generation, I have always been hesitant to upgrade. I don’t play the newest games either; I still get max settings on Doom Eternal and Red Dead 2, which I forget are half a decade old. The only game where it’s struggled on low settings is Baldur’s Gate 3, unfortunately, which has made me realise it’s ready for an upgrade.

I use an ultrabook from 2017 to play Minecraft sometimes.

The computer I built in 2011 lasted until last summer. I was smiling widely when I went to tell my wife and my friend, and my friend asked why I was smiling when my computer no longer worked.

“Because now he can buy a new one” my wife quickly replied 😁

This makes me wonder how long my build from last year should last me.

I’ve been rocking a Ryzen 2700X since 2018 or early 2019, and it’s still working like a champ. Granted, Cities: Skylines 2 is a bit much for it, but I’ve been playing Baldur’s Gate and Helldivers at about 100 fps on average.

That’s good to hear then.

I have a Ryzen 7 5800X, 32GB of 3600 RAM, and a 4060 Ti, and I mainly play Minecraft, Factorio, and most recently RDR2. So it should last forever lol

The 5700X or 5700X3D may be an upgrade path with your existing mobo. They were $100-190 a month ago.

How big was your city when you noticed performance issues? I’m near or at 100k on a 5700X3D and can play at 3x speed smoothly. When I had a 2700X, I noticed lag a lot sooner than 100k, but that was much nearer to launch.

Mine is from 2011 and still going strong. It got some upgrades like extra RAM, an SSD and a new GPU a couple of years ago, and I had to replace the front fan. It starts making a horrible noise about 4 hours into a gaming session with a graphically demanding game, but apart from that it runs perfectly fine. I don’t usually play demanding games, so I don’t really care. When it finally dies, I might just swap out the motherboard and CPU and keep the rest. It’s my personal ship of Theseus.

One upside of AAA games turning into unimaginative, shameless cash grabs is that the biggest reason to upgrade is now gone. My computer is around 8 years old now. I still play games, including new games, but not the latest fancy massively marketed online rubbish games. (I bet there’s a funner backronym, but this is good enough for now.)

How about the CASH abbreviation?

Created, Acquired, Stocks, Horseshit

The order in which they develop.

I’m still pushing a ten year old PC with an FX-8350 and a 1060. Works fine.

I didn’t think of my computer as old until I saw your comment with ten years and its GPU in the same sentence. When did that happen??

We reached the physical limits of silicon transistors. Speed is determined by transistor size (to a first approximation) and we just can’t make them any smaller without running into problems we’re essentially unable to solve thanks to physics. The next time computers get faster will involve some sort of fundamental material or architecture change. We’ve actually made fundamental changes to chip design a couple of times already, but they were “hidden” by the smooth improvement in speed/power/efficiency that they slotted into at the time.

My 4-year-old work laptop had a quad-core CPU. The replacement laptop issued to me this year has a 20-core CPU. The architecture change has already happened.

I’m not sure that’s really the sort of architectural change that was intended. It’s not fundamentally altering the chips in a way that makes them more powerful, just packing more into the system to raise its overall capabilities. It’s like claiming you had found a new way to make a bulletproof vest twice as effective by doubling the thickness of the material, when I think the original comment is talking about something more like finding a new base material, or altering the weave or physical construction so the vest weighs less while providing the same stopping power, which is quite a different challenge.

Except the 20-core laptop I have draws the same wattage as the previous one, so to go back to your bulletproof vest analogy, it’s like doubling the stopping power by adding more plates, except all the new plates weigh the same and take up the same space as all the old plates.

A lot of the efficiency gains in the last few years come from better chip design, in the sense that manufacturers are improving their on-chip algorithms and how the CPU decides to cut power to various components. The easy example is to look at how much more power-efficient an ARM-based processor is compared to an equivalent x86-based processor. The fundamental set of processes designed into the chip is based on those instruction set standards (ARM vs x86), and that in and of itself contributes to power efficiency. I believe RISC-V is also supposed to be a more efficient instruction set.

Since the speed of the processor is limited by how far the electrons have to travel, miniaturization is really the key to single-core processor speed. There has still been some recent success in miniaturizing the chip’s physical components, but not much. The current generation of CPUs has to deal with errors caused by quantum tunneling, and the smaller you make them, the worse it gets. It’s been a while since I learned about chip design, but I do know that we’ll have to make a fundamental chip “construction” change if we want faster single-core speeds. E.g., at one point, power was delivered to the chip components on the same plane as the chip itself, but that was running into density and power (thermal?) limits, so someone invented backside power delivery and chips kept on getting smaller. These days, the smallest features on a chip are maybe 4 dozen atoms wide.

I should also say, there’s not the same kind of pressure to push single-core speeds higher and higher like there used to be. These days, pretty much any chip can run fast enough to handle most users’ needs without issue. There are only so many operations per second needed to run a web browser.

I think I added the 1060 later if that helps :D

i am also using a ~10 year old PC, but mine is kinda lower end compared to yours

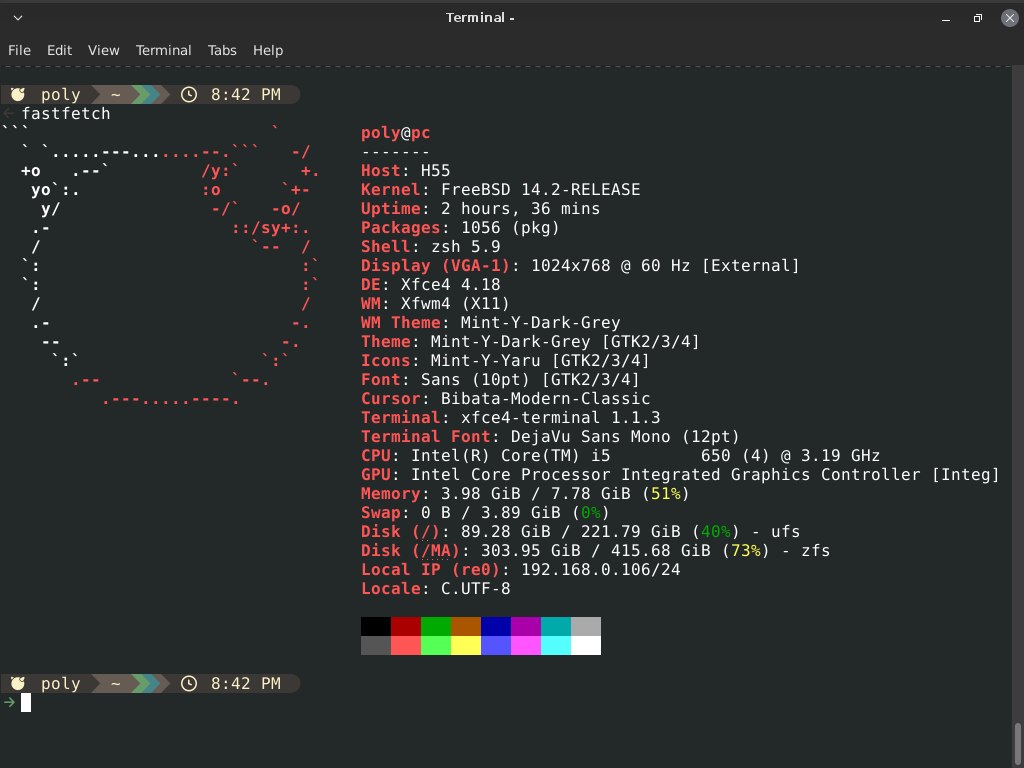

Genuine curiosity… Why BSD?

Also… There were significant improvements with Intel Sandy Bridge (2xxx series), and the parent is using an equivalent to that. Sandy+ (OP seems to be on Haswell or Ivy Bridge) is truly the mark of “does everything”… I’ve only bothered to upgrade because of CPU-hungry sim games that eat cores.

https://unixdigest.com/articles/technical-reasons-to-choose-freebsd-over-linux.html

mine is Clarkdale btw, just fyi

besides that, Linux just doesn’t support my hardware. in all the distros, in all the kernels, heck even the live Arch ISO, there is this weird issue where the PC randomly freezes with weird screen glitches, and the only way to make it work again is a forced hard reboot


Same here!

My trusty backup is still an FX-8320; the main is an i7-8700K with a 1070 Ti.

And it keeps you warm during cold snaps!

If people are pushing you to buy stuff, they are not friends. Do not listen to them.

No, no, see, sir, we are great friends!

Now let me tell you about this great $20,000 flatscreen that I get 30% commission on (welcome to Best Buy circa 2000)

(This is satire)

They’re mad they spent $1k on a GPU and still can’t do 4K without upscaling on the newest crapware games

Yeah, I’m with you anon. Here’s my rough upgrade path (dates are approximate):

- 2009 - built PC w/o GPU for $500, only onboard graphics; worked fine for Minecraft and Factorio

- 2014 - added GPU to play newer games (~$250)

- 2017 - built new PC (~$800; kept old GPU) because I need to compile stuff (WFH gig); old PC becomes NAS

- 2023 - new CPU, mobo, and GPU (~$600) because NAS uses way too much power since I’m now running it 24/7, and it’s just as expensive to upgrade the NAS as to upgrade the PC and downcycle

So for ~$2200, I got a PC for ~15 years and a NAS (drive costs excluded) for ~7 years. That’s less than most prebuilts, and similar to buying a console each gen. If I didn’t have a NAS, the 2023 upgrade wouldn’t have had a mobo, so it would’ve been $400 (just CPU and GPU), and the CPU would’ve been an extreme luxury (1700 -> 5600 is nice for sim games, but hardly necessary). I’m not planning any upgrades for a few years.

Yeah it’s not top of the line, but I can play every game I want to on medium or high. Current specs: Ryzen 5600, RX 6650 XT, 16GB RAM.

People say PC gaming is expensive. I say hobbies are expensive, and PC gaming can be inexpensive. This is ~$150/year, which is pretty affordable… And honestly, I could be running that OG PC from 2009 with just a second GPU upgrade for a grand total of $800 over 15 years if all I wanted was to play games.
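As a rough sanity check of the ~$150/year figure, here is a small Python sketch that adds up the approximate prices from the timeline (drive costs excluded, as in the comment) and spreads them over the ~15 years of use. The variable names are just illustrative labels, and the 15-year span is taken loosely from the comment rather than exact dates.

```python
# Approximate spend from the timeline above, in USD (NAS drives excluded).
purchases = {
    2009: 500,  # PC built without a GPU, onboard graphics only
    2014: 250,  # GPU added for newer games
    2017: 800,  # new PC, old GPU kept; old PC became the NAS
    2023: 600,  # new CPU, motherboard and GPU
}

# Rough span of use quoted in the comment (~15 years); an approximation.
YEARS_OF_USE = 15

total = sum(purchases.values())
per_year = total / YEARS_OF_USE

print(f"total: ${total}")
print(f"per year: ${per_year:.0f}")
```

That works out to $2,150 in total and roughly $143 per year, which matches the "~$2200" and "~$150/year" in the comment once you round up.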

Almost the exact same timeline, prices and specs here. I just went with the RX 6600 instead, once hardware became somewhat affordable again after the crypto hype and COVID. I always bought the lower-midrange hardware of the current generation whenever an upgrade was wanted or needed. It’s always good to read about non-high-end stuff, though.

I only got the 6650 because it was on sale for $200 or something, I was actually looking for the 6600 but couldn’t find a reasonable deal.

I make enough now that I don’t need to be stingy on hardware, but I honestly don’t max the hardware I have so it just seems wasteful. I probably won’t upgrade until either my NAS dies or the next AMD socket comes out (or there’s a really good deal). I don’t care about RTX, VR kinda sucks on Linux AFAIK, and I think newer AAA games kinda suck.

I’ll upgrade if I can’t play something, but my midrange system is still fine. I’m expecting no upgrades for 3-5 more years.

put linux on that beast and it’ll keep running new games til 2030

I thought anon was the normie? The average person doesn’t upgrade their PC every two years. The average person buys a PC and replaces it when nothing works anymore. Anon is the normie; they are the enthusiasts. Anon is not hanging out with a group of people with matching ideologies.

Let’s just drop the word “normie” altogether.

The word is incredibly vague and fails to reflect the diversity of viewpoints and opinions. Everyone has their own perception of what is most common, so the definition varies wildly.

The word is incredibly vague

isn’t that the point?

It’s supposed to refer to “normal” people: an incredibly broad and vague selection of people who are rather indistinct.

Kind of, but in doing so it loses any significant meaning. Everyone interprets it as they see fit

These are PC gamers, their hobby revolves around computers.

It’s similar to how car enthusiasts might give you shit for driving a ten year old Camry, whereas most people won’t care.

Yeah, most people. Aka the “normies”.

Normies still have hobbies

The point the thread OP is trying to make is that a “normie” is someone who is not as passionate about a subject as, say, an enthusiast. So naturally, they will not be as prone to spend money and replace their gear very often.

I upgraded last year from an i7-4700k to an i7-12700k and from a GTX 750 Ti to an RTX 3060 Ti, because 8 threads and 2GB of VRAM were finally not enough for modern games. And my old machine still runs as a home server.

The jump was huge and I hope I’ll have money to upgrade sooner this time, but if needed I can totally see that my current machine will work just fine in 6-8 years.

I’m still rocking the 4790K. It’s been a damn good CPU.

My computer needs an upgrade now, but really what’s happening is I’m getting GPU bottlenecked, the CPU is still okay actually.

Upgrade the GPU, reveal the CPU bottleneck.

That’s where I was a couple years ago. Originally, I had an R9 290. Amazing card circa 2014, but its 4 GB of VRAM aged it pretty badly by 2020. Now I’ve got a 4070, which is way more than good enough for the 1080p60 that I run at. I’ll upgrade the rest of the PC to match the GPU a little better in the future, but for right now, I don’t need to. Except maybe for Stellaris.

But I just ripped a bunch of my old PS2 games to my PC because I felt like revisiting them. And my PS2 is toast. RIP, old friend. :(