See THIS POST

Notice the 2,000 upvotes?

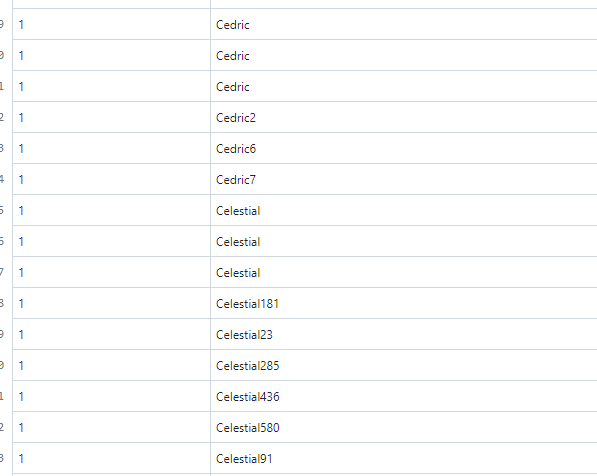

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method to deal with this kind of manipulation.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem: the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Spinning up a new instance can be fully automated, and takes UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online… (lemmyonline.com) I can assure you, I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods for admins to identify suspicious activity from their users via the UI. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.
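To make the last point concrete, here is a rough sketch of the kind of check an admin might run on vote data pulled from the database. Everything in it is an assumption for illustration: the `Vote` record, its fields, and the thresholds are hypothetical and do not reflect Lemmy's actual schema or API. It only flags posts where most of the votes come from very new accounts, which is one cheap signal among many.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    # Hypothetical record shape, NOT Lemmy's real schema.
    post_id: int
    voter: str
    voter_instance: str
    account_age_days: float


def flag_suspicious_posts(votes, max_age_days=1.0, min_votes=20, threshold=0.8):
    """Return {post_id: share_of_new_account_votes} for posts that look botted.

    A post is flagged when it has at least `min_votes` votes and at least
    `threshold` of them come from accounts no older than `max_age_days`.
    The default values are arbitrary placeholders.
    """
    totals = defaultdict(int)
    young = defaultdict(int)
    for v in votes:
        totals[v.post_id] += 1
        if v.account_age_days <= max_age_days:
            young[v.post_id] += 1

    flagged = {}
    for post_id, total in totals.items():
        if total < min_votes:
            continue  # too few votes to say anything meaningful
        share = young[post_id] / total
        if share >= threshold:
            flagged[post_id] = share
    return flagged
```

A patient bot owner can age accounts before using them, so a check like this would only catch the lazy cases. It is meant to show what sort of signal could be surfaced in the admin UI, not to be a complete answer.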

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate that content, as was proven above, what is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of, and I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

We need browser fingerprinting for this.

Browser fingerprints are easy enough to block or mimic, though, at least for the solutions I’ve messed with. JavaScript-based solutions in particular are tricky because of the privacy implications and the fact that decent privacy-focused browsers are starting to block those things automatically.

No.

Fingerprinting is against the goals of Lemmy and privacy. Lemmy should be for the good of people.

If anything there should be SOME centralization that allows other (known, somehow verified) instances to vote to allow/disallow spammy instances. In some way that couldn’t be abused. This may lead to a fork down the road (think BTC vs BCH) due to community disagreements but I don’t really see any other way this doesn’t become an absolute spamfest. As it stands now one server admin could spamfest their own server with their own spam, and once it starts federating EVERYONE gets flooded. This also easily creates a DoS of the system.
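Just to illustrate what I mean by verified instances voting, here is a toy sketch. It assumes there is already some agreed list of trusted instances, which is the hard part and is not solved here. The function name, the quorum, and the supermajority values are all made up for the example, and nothing like this exists in Lemmy as far as I know.

```python
def tally_block_vote(ballots, trusted, quorum=0.5, supermajority=2 / 3):
    """Decide whether a proposal to block an instance passes.

    ballots: iterable of (instance_name, wants_block) pairs.
    trusted: set of instance names allowed to vote (assumed to exist).
    Only trusted instances count, and each gets one vote (the last one cast).
    """
    latest = {}
    for instance, wants_block in ballots:
        if instance in trusted:
            latest[instance] = bool(wants_block)

    # Require enough of the trusted instances to have voted at all.
    if not trusted or not latest:
        return False
    if len(latest) / len(trusted) < quorum:
        return False

    yes_votes = sum(latest.values())
    return yes_votes / len(latest) >= supermajority
```

Ballots from unverified instances are simply ignored, so spinning up a hundred spam servers to stuff the vote does nothing. Whoever controls the trusted list still controls the outcome, which is exactly the abuse problem I mentioned.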

Asking instance admins to require CAPTCHA or whatever to defeat spam doesn’t work when the instance admins are the ones creating spam servers to spam the federation.

We are working on this currently. Stay tuned.

I would be careful using both of those words in the same sentence. The ONLY private things on this entire platform are your email address and your IP. If you post, comment, or vote on a public instance, that data is sent to every other subscribing instance.

That being said, unless you volunteer information to Lemmy, it doesn’t know who you are.

That also being said, I am against letting Google handle data collection for Lemmy.